August 8, 2025

OpenAI & NVIDIA Launch Optimized GPT-OSS Models for Next-Gen AI Applications

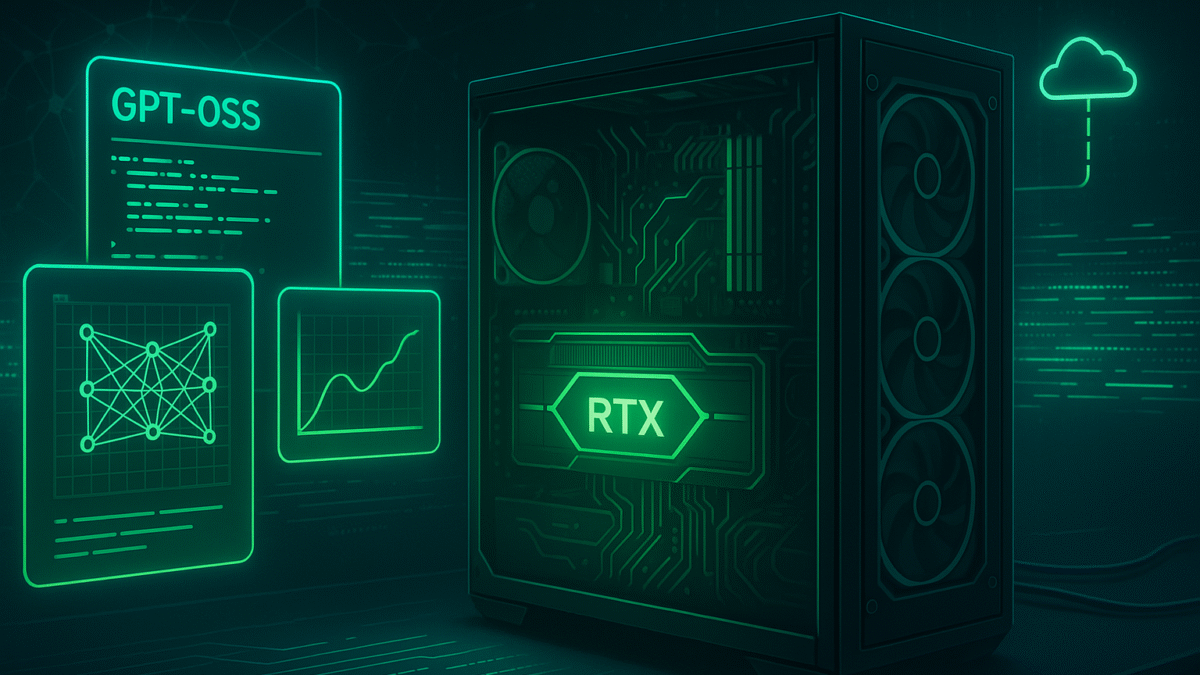

OpenAI and NVIDIA have joined forces to release two high-performance, open-weight reasoning models, gpt-oss-20B and gpt-oss-120B, optimized for NVIDIA GPUs. The models bring fast, capable inference from the cloud to local devices, enabling agentic AI for tasks such as web search, deep research, and coding.

Blazing-Fast Performance Across Devices

Now available to developers and AI enthusiasts worldwide, the gpt-oss models are fully optimized for NVIDIA RTX AI PCs and workstations, with throughput of up to 256 tokens per second on the GeForce RTX 5090 GPU. Users can run them through popular tools such as Ollama, llama.cpp, and Microsoft AI Foundry Local.
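To put the peak figure in perspective: generation time scales inversely with throughput, so at the quoted best case of 256 tokens per second each token takes about 3.9 ms, and a 1,000-token answer takes roughly 3.9 seconds. This back-of-the-envelope estimate ignores prompt-processing time and assumes the peak rate holds for the whole response, which real workloads may not sustain.

```latex
t_{\text{token}} = \frac{1}{256\ \text{tokens/s}} \approx 3.9\ \text{ms},
\qquad
t_{1000} = \frac{1000\ \text{tokens}}{256\ \text{tokens/s}} \approx 3.9\ \text{s}
```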

“OpenAI showed the world what could be built on NVIDIA AI — and now they’re advancing innovation in open-source software,” said Jensen Huang, CEO of NVIDIA.

Smart, Flexible, Open-Weight AI Models

The gpt-oss-20B and 120B models are open-weight reasoning models built with a mixture-of-experts architecture. They support:

1. Chain-of-thought reasoning

2. Adjustable reasoning depth

3. Long-context processing up to 131,072 tokens

4. Instruction following

5. Tool usage

6. High-efficiency MXFP4 precision for enhanced speed and lower resource consumption
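MXFP4 is the 4-bit microscaling format from the Open Compute Project (OCP) MX specification: values are stored in blocks of 32 FP4 (E2M1) elements that share one 8-bit power-of-two scale. That works out to 4 + 8/32 = 4.25 bits per weight versus 16 for BF16, roughly a 3.8x reduction in weight storage, which is where the speed and memory savings come from. The pure-Python sketch below illustrates the idea only; it is not the kernel code NVIDIA or OpenAI ship, and it uses nearest-value rounding with ties going to the smaller magnitude, a simplification of the spec's rounding rules.

```python
import math

# Magnitudes representable by one FP4 (E2M1) element in the OCP MX format.
FP4_E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_EMAX = 2      # exponent of the largest E2M1 value: 6.0 = 1.5 * 2**2
BLOCK_SIZE = 32   # MXFP4 groups 32 elements under one shared scale
SCALE_BITS = 8    # the shared scale is an 8-bit power of two (E8M0)


def quantize_block(block):
    """Return (shared_exponent, fp4_values) for one block of floats."""
    max_abs = max(abs(x) for x in block)
    if max_abs == 0.0:
        return 0, [0.0] * len(block)
    # Choose the power-of-two scale that maps the largest magnitude
    # into (roughly) [4, 8), the top binade of the FP4 grid.
    shared_exp = math.floor(math.log2(max_abs)) - FP4_EMAX
    scale = 2.0 ** shared_exp
    fp4_values = []
    for x in block:
        scaled = abs(x) / scale
        # Nearest representable magnitude; anything above 6.0 saturates to 6.0.
        # Ties resolve to the smaller magnitude (simplified rounding).
        nearest = min(FP4_E2M1_VALUES, key=lambda v: abs(v - scaled))
        fp4_values.append(math.copysign(nearest, x))
    return shared_exp, fp4_values


def dequantize_block(shared_exp, fp4_values):
    """Reconstruct approximate floats by multiplying the shared scale back in."""
    scale = 2.0 ** shared_exp
    return [v * scale for v in fp4_values]


def bits_per_element(block_size=BLOCK_SIZE, scale_bits=SCALE_BITS):
    """4 bits per element plus the shared scale amortized over the block."""
    return 4 + scale_bits / block_size
```

For example, the block `[1.0, -2.0, 3.0, 0.5]` (padded to 32 with zeros) gets shared exponent -1 and round-trips exactly, while an isolated `0.7` comes back as `0.75`, which shows the precision traded for the smaller footprint.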

These capabilities make them ideal for a variety of tasks including:

✅ Web and document search

✅ Intelligent coding assistance

✅ Complex research workflows

✅ Large-scale local inference
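The mixture-of-experts architecture is what lets models this large run quickly: for each token, a small router scores every expert and only the highest-scoring few are actually evaluated, so per-token compute stays far below what the total parameter count suggests. The sketch below shows generic top-k routing in pure Python; the toy experts, the `top_k` value, and the choice to apply softmax over only the selected logits are illustrative assumptions, not the exact configuration of gpt-oss-20B or gpt-oss-120B.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k):
    """Pick the top_k experts for one token and normalize their gate weights."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:top_k]
    gates = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, gates))


def moe_forward(x, experts, router_logits, top_k=2):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    output = [0.0] * len(x)
    for expert_idx, gate in route_token(router_logits, top_k):
        y = experts[expert_idx](x)
        output = [o + gate * v for o, v in zip(output, y)]
    return output
```

With four toy experts that scale their input by 1, 2, 3, and 4, router logits `[0.0, 5.0, 5.0, -1.0]`, and `top_k=2`, experts 1 and 2 are selected with equal gates of 0.5, so the input `[1.0, 1.0]` produces `[2.5, 2.5]`; the other two experts do no work for that token.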

Run GPT-OSS on Your RTX PC with Ollama

Ollama is the easiest way to test these models on RTX GPUs (with 24GB+ VRAM). With its new user-friendly UI and seamless integration, you can:

Chat instantly with GPT-OSS models

Upload PDFs and text files for direct interaction

Use multimodal features with image support

Customize context length for large documents

Access SDK or CLI integration for developers

Ollama is already fully optimized for RTX — no manual setup or tuning needed.
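For developers taking the SDK or API route, Ollama serves a local REST API, by default at `http://localhost:11434`. The sketch below calls its `/api/chat` endpoint using only Python's standard library. It assumes the Ollama server is running and that the model has been pulled under the tag `gpt-oss:20b` (for example with `ollama pull gpt-oss:20b`). The `num_ctx` option is Ollama's context-length setting; the 8,192 default here is an arbitrary illustrative value, and larger values up to the models' 131,072-token limit consume more VRAM. The helper names `build_chat_request` and `chat` are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint


def build_chat_request(prompt, model="gpt-oss:20b", num_ctx=8192):
    """Build the JSON body for Ollama's /api/chat endpoint."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,                   # one JSON reply instead of a token stream
        "options": {"num_ctx": num_ctx},   # context window size in tokens
    }


def chat(prompt, **kwargs):
    """Send one prompt to a running Ollama server and return the reply text."""
    body = json.dumps(build_chat_request(prompt, **kwargs)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        data = json.load(resp)
    # Non-streaming responses carry the assistant turn under "message".
    return data["message"]["content"]
```

Usage: `print(chat("Summarize this report in three bullet points.", num_ctx=32768))` sends a single request with a 32K-token context window.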

Other Tools to Run OpenAI Models on RTX

Developers can also use the models via:

llama.cpp and GGML with CUDA Graphs optimizations

Microsoft AI Foundry Local, which uses ONNX Runtime with CUDA, with TensorRT support coming soon for top-tier local inference

These tools make it possible to run GPT-OSS models even on RTX GPUs with 16GB VRAM, giving broader access to AI innovation.
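llama.cpp's bundled `llama-server` exposes an OpenAI-compatible HTTP API, by default on `127.0.0.1:8080`. The sketch below assumes a server started with a gpt-oss GGUF file (for example `llama-server -m <path-to-gpt-oss-gguf>`, where the path is a placeholder) and posts to `/v1/chat/completions`. The helpers `build_payload`, `extract_reply`, and `ask` are hypothetical names, split so the request and response handling can be checked without a running server.

```python
import json
import urllib.request

SERVER_URL = "http://127.0.0.1:8080/v1/chat/completions"  # llama-server default host/port


def build_payload(prompt, max_tokens=512):
    """OpenAI-style chat request; llama-server answers with the model it loaded."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,  # cap on generated tokens
    }


def extract_reply(response):
    """Pull the assistant text out of an OpenAI-style chat completion response."""
    return response["choices"][0]["message"]["content"]


def ask(prompt, url=SERVER_URL, **kwargs):
    """Send one prompt to a running llama-server and return the reply text."""
    body = json.dumps(build_payload(prompt, **kwargs)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return extract_reply(json.load(resp))
```

Because the request follows the OpenAI chat format, the same payload shape works with other OpenAI-compatible local servers by changing `url`.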

Join the AI Innovation Wave

The release of these models marks a major milestone in open AI development, unlocking powerful tools for developers building intelligent agents, productivity apps, creative tools, and research assistants — all on local RTX-powered machines.

🧠 Follow the RTX AI Garage Blog Series for updates, tutorials, and use cases.

🌐 Stay connected with NVIDIA AI PC on Facebook, Instagram, TikTok, X, and Discord.

📩 Subscribe to the RTX AI PC newsletter to never miss an update.